I asked AI what I should worry about regarding AI, and here's what it told me.

- Dan Connors

- Aug 14, 2025

AI will probably most likely lead to the end of the world, but in the meantime, there'll be great companies.

Sam Altman- CEO of OpenAI

Deep-learning will transform every single industry. Healthcare and transportation will be transformed by deep-learning. I want to live in an AI-powered society. When anyone goes to see a doctor, I want AI to help that doctor provide higher quality and lower cost medical service. I want every five-year-old to have a personalised tutor.

Andrew Ng- founder of the Google Brain project and AI entrepreneur

What all of us have to do is to make sure we are using AI in a way that is for the benefit of humanity, not to the detriment of humanity.

Tim Cook- CEO of Apple

Does anybody really understand what's going on with artificial intelligence? Corporations? Politicians? Governments? We've heard claims that it will be a huge boost to human knowledge and productivity, but also claims that it will take over and wipe out civilization. Is anybody out there working on the implications of all this machine learning?

Since 2023, AI programs have made their way into search engines, computer apps, and seemingly everything else. Data centers full of powerful computers are springing up all over, with over 5,400 in use as I write this. Almost all of them have been built in the last few years to accommodate the rapid rise of AI.

We've had computer algorithms for decades that have guided our decisions, but AI is much more powerful and consequential. An algorithm is a specific set of instructions that tells the computer how to do a task. Algorithms have been used by industry to improve productivity and by social media companies to drive engagement. They can be tweaked, but the true control still rests with the programmers.

AI is a different animal. These most-powerful computers have been fed as much information about humans and our world as possible and allowed to play with it in unimagined ways. They are able to think on their own and come up with new ideas just like a human brain can. Generative AI uses old information to synthesize new things, including books, music, artwork, videos, and policies. We tend to put computer science on a pedestal, but the term "garbage in, garbage out" seems to apply here on another level. AI programs can be just as fallible as the humans they try to imitate.

I asked an AI program, Google Gemini, what I should be worried about and here are some of its suggestions.

1- Algorithmic bias. This is the number one danger of AI, and I'll use Google's own words here:

"Humans need to be most careful about the potential for artificial intelligence to perpetuate and amplify existing societal biases. This is because AI systems learn from the data they're trained on, which often reflects the historical prejudices and inequalities present in society. When these biased datasets are used to train AI, the resulting algorithms can make discriminatory decisions with far-reaching consequences.

Algorithmic bias is a significant risk of AI. It occurs when an AI system's output is systematically unfair to certain groups. This isn't because the AI is intentionally malicious, but because the data it was trained on was flawed or unrepresentative. For example, a facial recognition system trained predominantly on data from lighter-skinned individuals may have a higher error rate when identifying people with darker skin tones. Similarly, a hiring algorithm trained on historical data from a company that has a history of favoring male applicants might continue to favor male candidates, reinforcing the original bias."
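To make that hiring example concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the records, the group labels, the `learn_hire_rates` and `recommend` helper names, and the 0.5 threshold are all invented, and no real hiring system is this simple. It only shows how a program that learns from skewed history can hand the same skew back as a recommendation.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# The counts are invented purely for illustration.
HISTORY = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)


def learn_hire_rates(records):
    """'Train' by memorizing each group's historical hire rate."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}


def recommend(group, rates, threshold=0.5):
    """Recommend a candidate only if their group's past hire rate meets the threshold."""
    return rates[group] >= threshold


rates = learn_hire_rates(HISTORY)
print(rates)                       # {'male': 0.6, 'female': 0.3}
print(recommend("male", rates))    # True
print(recommend("female", rates))  # False
```

Nothing in the code mentions a preference for one group. The skew comes entirely from the records it was given, which is the point of the quoted passage.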

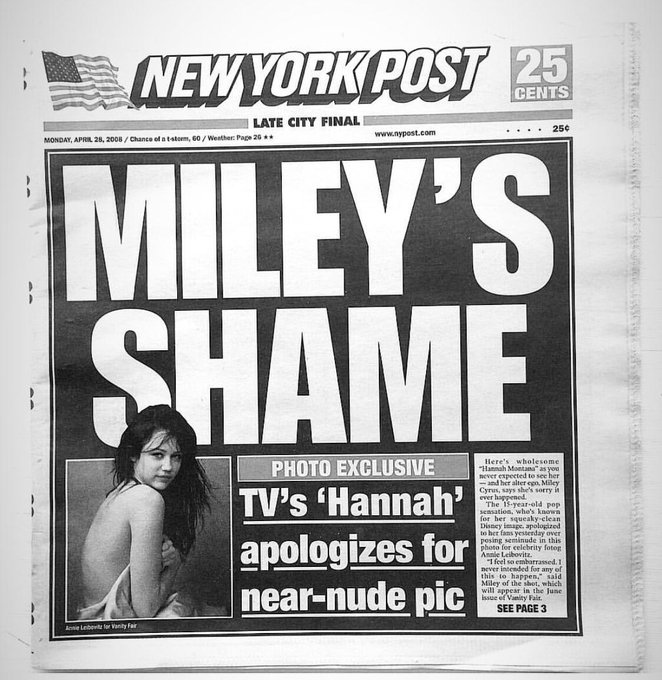

2- Misinformation and manipulation. (My own words from now on) AI is only as ethical as the people using it. It can now be used to create deepfakes and altered images or videos that can fuel conspiracy theories or attacks on reality. It's capable of creating fake documents including high school essays, scientific papers, medical records, financial reports, and more. Fraud is a huge concern with AI tools in unscrupulous hands. Detecting it will be a growing challenge as the programs get more sophisticated.

3- Replacing humans in their jobs. As AI grows in popularity, there is a strong likelihood that it will replace many professions, and those that remain will be tethered to AI programs that do much of their work for them. Advanced robotics can mimic most manual tasks, and AI can do most of the thinking. For centuries, jobs and careers were what defined us as humans. What will we get to replace that (not to mention our livelihoods) if the machines make us obsolete?

4- Over-reliance could lead to cognitive skill decline. If AI does most of the work, why would humans ever need to do the hard work of learning, assimilating, and thinking? Students and teachers are already turning to AI to make their work easier. But doing the hard work of mental problem-solving is the only way to strengthen the brain and provide a backstop in case AI fails. While some humans are just plain lazy, others may take on more than they can cognitively handle and depend on AI to help out. Without the ability to problem-solve in unique situations, humanity loses control over its very existence and becomes a slave to AI.

These are just the highlights. There are many more concerns that both Google and I have detected. The AI revolution is like a freight train right now- there's no stopping it. There are many benefits that I hope come to pass, and it's not like humans alone have figured things out so great up to now. If AI can help us solve climate change, that alone may make it worth it. But AI is like any tool- only as good as the people who control it. And the folks in Silicon Valley are not exactly looking out for the planet's welfare- they're in it for the money.

Here are some more homework questions for all of us as we get deeper into this AI revolution:

How much water and energy do these data centers consume? Some communities are worried, especially when an energy transition is supposed to be ongoing.

Who is in control of these AI systems, and can the machines ever outsmart them and take over?

How does artificial intelligence affect our privacy? AI can outsmart CAPTCHA blocks, but can it hack our personal data and use it against us?

How safe are driverless cars and trucks? Really?

Can we trust AI chatbots with our children? Are chatbots going to replace human interaction?

How will this affect the economy? Will more concentrated brainpower result in more concentrated wealth as well? And can systems like this crash the stock market before any human can prevent it?

AI can't experience emotions, empathy, or love. Will its decisions align with the complex needs of humanity?

There are a lot of dire predictions out there about artificial intelligence, and it's hard to find a sober discussion without a lot of misinformation, hysteria, or hyperbole. Somehow we need to confront this new technology with guardrails and guidelines. That's on us and the leaders we put in charge. It may well bring great progress, productivity and discoveries, or it may bring problems that we can barely imagine now. But we can't ignore it.

Here is one of the more sober discussions, on one of my favorite shows, 60 Minutes.
